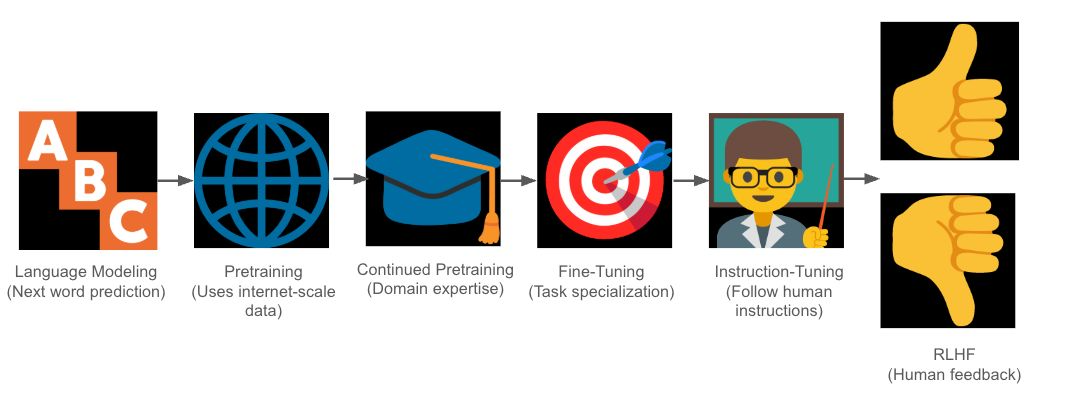

We will begin by defining the most fundamental building block of LLMs: language modeling. It dates back to early statistical NLP methods of the 1980s and 1990s and was later popularized with the advent of neural networks in the early 2010s.

In its simplest form, language modeling is about learning to predict the next word in a sentence. This task, known as next-word prediction, is at the core of how LLMs learn language patterns. The model does this by estimating a probability distribution over sequences of words. This lets it assign a probability to any candidate next word, given the context provided by the preceding words.
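To make next-word prediction concrete, here is a minimal sketch of a count-based bigram model. It is a deliberately simplified stand-in, not how LLMs work. It assumes that the next word depends only on the single preceding word, whereas LLMs use neural networks that condition on long contexts. The function names (`train_bigram`, `next_word_distribution`, `predict_next`, `sequence_probability`) and the three-sentence corpus are hypothetical and exist only for illustration. The probability of a whole sentence is computed with the chain rule: the product of each word's conditional probability given what came before. Here, "what came before" is truncated to one word.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count how often each word follows each preceding word.

    <s> and </s> are hypothetical sentence-boundary markers added here.
    """
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_distribution(counts, prev):
    """Estimate P(next | prev) by maximum likelihood (relative frequency)."""
    following = counts.get(prev.lower())  # .get avoids creating empty entries
    if not following:
        return {}
    total = sum(following.values())
    return {word: c / total for word, c in following.items()}


def predict_next(counts, prev):
    """Return the most probable next word, or None for an unseen context."""
    dist = next_word_distribution(counts, prev)
    if not dist:
        return None
    return max(dist, key=dist.get)


def sequence_probability(counts, sentence):
    """Chain rule under the bigram assumption: product of P(w_t | w_{t-1})."""
    tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
    prob = 1.0
    for prev, nxt in zip(tokens, tokens[1:]):
        prob *= next_word_distribution(counts, prev).get(nxt, 0.0)
    return prob


# Toy corpus, invented for illustration only.
corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
counts = train_bigram(corpus)

print(next_word_distribution(counts, "the"))  # "cat" gets 2/6, the rest 1/6 each
print(predict_next(counts, "the"))            # 'cat'
print(sequence_probability(counts, "the cat sat on the mat"))  # 1/36, about 0.0278
```

Because every probability comes from raw counts, any word pair that never appears in the corpus gets probability zero. This is one of the limitations that smoothing techniques and, later, neural language models were designed to overcome.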